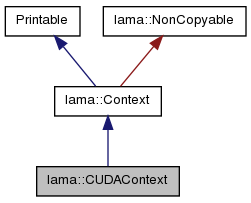

CUDAContext initializes the CUDA device with the given number.

#include <CUDAContext.hpp>

Public Types

| Type | Member | Description |
|---|---|---|
| enum | ContextType { Host, CUDA, OpenCL, MaxContext } | Enumeration type for the supported contexts. |

Public Member Functions

| Type | Member | Description |
|---|---|---|
| virtual | ~CUDAContext () | The destructor destroys this CUDA context and, if needed, frees the initialized CUDA device. |
| int | getDeviceNr () const | |
| virtual bool | canUseData (const Context &other) const | Checks whether this context can use the data of the other context. |
| virtual void | writeAt (std::ostream &stream) const | Writes information about the device to the output stream. Derived classes should override this method to give more specific information about the device that might be useful for logging. |
| virtual void * | allocate (const size_t size) const | |
| virtual void | allocate (ContextData &contextData, const size_t size) const | This method allocates memory and must be implemented by each Context. |
| virtual void | free (void *pointer, const size_t size) const | This method frees data that was allocated by this allocator. |
| virtual void | free (ContextData &contextData) const | This method frees data that was allocated by this allocator. |
| virtual void | memcpy (void *dst, const void *src, const size_t size) const | Memory copy within the same context. |
| virtual std::auto_ptr< SyncToken > | memcpyAsync (void *dst, const void *src, const size_t size) const | |
| virtual bool | cancpy (const ContextData &dst, const ContextData &src) const | Checks if this Context can copy from src to dst. |
| virtual void | memcpy (ContextData &dst, const ContextData &src, const size_t size) const | Memory copy. |
| virtual std::auto_ptr< SyncToken > | memcpyAsync (ContextData &dst, const ContextData &src, const size_t size) const | |
| virtual void | enable (const char *filename, int line) const | The CUDA interface used by the implementation requires that the device be set via a setDevice routine. |
| virtual void | disable (const char *filename, int line) const | Disable computations in the context. |
| std::auto_ptr < CUDAStreamSyncToken > | getComputeSyncToken () const | |
| std::auto_ptr < CUDAStreamSyncToken > | getTransferSyncToken () const | |
| virtual std::auto_ptr< SyncToken > | getSyncToken () const | Implementation for Context::getSyncToken. |
| ContextType | getType () const | Method to get the type of the context. |
| bool | operator== (const Context &other) const | This predicate returns true if the two contexts have the same type and can use the same data. |
| bool | operator!= (const Context &other) const | The inequality operator is the inverse of operator==. |
| class LAMAInterface & | getInterface () const | This method returns the interface for this context. |

Protected Member Functions

| Type | Member | Description |
|---|---|---|
| | CUDAContext (int device) | Constructor for a context on a certain device. |

Protected Attributes

| Type | Member | Description |
|---|---|---|
| ContextType | mContextType | |
| bool | mEnabled | If true, the context is currently being accessed. |
| const char * | mFile | File name where the context has been enabled. |
| int | mLine | Line number where the context has been enabled. |

Private Member Functions

| Type | Member | Description |
|---|---|---|
| void | memcpyFromHost (void *dst, const void *src, const size_t size) const | |
| void | memcpyToHost (void *dst, const void *src, const size_t size) const | |
| void | memcpyFromCUDAHost (void *dst, const void *src, const size_t size) const | |
| void | memcpyToCUDAHost (void *dst, const void *src, const size_t size) const | |
| std::auto_ptr< SyncToken > | memcpyAsyncFromHost (void *dst, const void *src, const size_t size) const | |
| std::auto_ptr< SyncToken > | memcpyAsyncToHost (void *dst, const void *src, const size_t size) const | |
| std::auto_ptr< SyncToken > | memcpyAsyncFromCUDAHost (void *dst, const void *src, const size_t size) const | |
| std::auto_ptr< SyncToken > | memcpyAsyncToCUDAHost (void *dst, const void *src, const size_t size) const | |
| | LAMA_LOG_DECL_STATIC_LOGGER (logger) | |

Private Attributes

| Type | Member | Description |
|---|---|---|
| int | mDeviceNr | Number of the device for this context. |
| CUdevice | mCUdevice | Data structure for the device. |
| CUcontext | mCUcontext | Data structure for the context. |
| CUstream | mTransferStream | Stream for memory transfers. |
| CUstream | mComputeStream | Stream for asynchronous computations. |
| cusparseHandle_t | mCusparseHandle | Handle to the cuSPARSE library. |
| std::string | mDeviceName | Name set during initialization. |
| Thread::Id | mOwnerThread | |
| int | mNumberOfAllocates | Counts the number of allocations. |
| long long | mNumberOfAllocatedBytes | Counts the bytes allocated on the device. |
| long long | mMaxNumberOfAllocatedBytes | Counts the maximum number of allocated bytes. |

Static Private Attributes

| Type | Member | Description |
|---|---|---|
| static int | currentDeviceNr = -1 | Number of the device currently set for CUDA. |
| static int | numUsedDevices = 0 | Total number of used devices. |
| static size_t | minPinnedSize = std::numeric_limits<size_t>::max() | |

Friends

| Type | Member | Description |
|---|---|---|
| class | CUDAContextManager | |

Detailed Description

CUDAContext initializes the CUDA device with the given number.

CUDAContext initializes the CUDA device with the given number following the RAII (Resource Acquisition Is Initialization) programming idiom (http://www.hackcraft.net/raii/). The device is initialized in the constructor and cleaned up in the destructor. CUDAContext uses a static counter to avoid multiple device initializations.
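The RAII idiom combined with a static usage counter can be sketched as follows. This is a minimal illustration under stated assumptions, not LAMA's implementation: DeviceGuard, initDriver() and releaseDriver() are hypothetical names, and the two functions only record their calls in place of the real CUDA driver setup and teardown so that the sketch runs without a GPU.

```cpp
// Hypothetical stand-ins for driver-level setup/teardown; they only count
// invocations so the sketch runs on any machine.
static int initCalls = 0;
static int releaseCalls = 0;
static void initDriver() { ++initCalls; }
static void releaseDriver() { ++releaseCalls; }

// Hypothetical RAII guard: the resource is acquired in the constructor and
// released in the destructor. The static counter makes sure the shared state
// is initialized by the first guard only and released by the last one.
class DeviceGuard
{
public:
    explicit DeviceGuard( int device ) : mDeviceNr( device )
    {
        if ( numUsedDevices++ == 0 )
        {
            initDriver();   // first user: initialize once
        }
    }

    ~DeviceGuard()
    {
        if ( --numUsedDevices == 0 )
        {
            releaseDriver();   // last user: clean up
        }
    }

    // Non-copyable, like a real device handle.
    DeviceGuard( const DeviceGuard& ) = delete;
    DeviceGuard& operator=( const DeviceGuard& ) = delete;

    int getDeviceNr() const { return mDeviceNr; }

    static int usedDevices() { return numUsedDevices; }

private:
    int mDeviceNr;
    static int numUsedDevices;   // analogous in spirit to CUDAContext::numUsedDevices
};

int DeviceGuard::numUsedDevices = 0;
```

Creating two guards in one scope triggers a single initialization, and leaving the scope triggers a single release.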

Member Enumeration Documentation

enum lama::Context::ContextType [inherited]

Enumeration type for the supported contexts.

The type is used to select the appropriate code that will be used for the computations in the context.

The same context type does not imply that two different contexts can use the same data. For example, two CUDA contexts might each allocate their own data, which must then be transferred explicitly between them.
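The distinction between "same type" and "can use the same data" can be illustrated with a simplified context. This is a hypothetical sketch: SketchContext is not a LAMA class, and the rule that CUDA contexts share data only on the same device is an assumption (suggested by CUDAContext::canUseData() referencing mDeviceNr). The equality operator follows the description of operator==: same type and able to use the same data.

```cpp
// Mirrors the enumerators documented for lama::Context::ContextType.
enum ContextType { Host, CUDA, OpenCL, MaxContext };

// Hypothetical, simplified context holding only what the comparison needs.
struct SketchContext
{
    ContextType type;
    int deviceNr;   // assumption: only meaningful for CUDA contexts

    // Assumption: host contexts share all data; CUDA contexts share data
    // only when they run on the same device.
    bool canUseData( const SketchContext& other ) const
    {
        if ( type != other.type )
        {
            return false;
        }
        if ( type == CUDA )
        {
            return deviceNr == other.deviceNr;
        }
        return true;
    }

    // Same type AND able to use the same data, as described for operator==.
    bool operator==( const SketchContext& other ) const
    {
        return type == other.type && canUseData( other );
    }

    // Inverse of operator==.
    bool operator!=( const SketchContext& other ) const
    {
        return !( *this == other );
    }
};
```

Two CUDA contexts on devices 0 and 1 have the same type but compare unequal, because their data must be transferred explicitly.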

Constructor & Destructor Documentation

lama::CUDAContext::~CUDAContext () [virtual]

The destructor destroys this CUDA context and, if needed, frees the initialized CUDA device.

References lama::CUDAContext_cusparseHandle, currentDeviceNr, LAMA_CHECK_CUBLAS_ERROR, LAMA_CUDA_DRV_CALL, mComputeStream, mCUcontext, mDeviceNr, mMaxNumberOfAllocatedBytes, mNumberOfAllocatedBytes, mNumberOfAllocates, mTransferStream, and numUsedDevices.

lama::CUDAContext::CUDAContext (int device) [protected]

Constructor for a context on a certain device.

- Parameters:
  - [in] device: the number of the CUDA device to initialize.

- Exceptions:
  - Exception if the device initialization fails.

If device is DEFAULT_DEVICE_NUMBER, the device number is taken from the environment variable LAMA_DEVICE. If this variable is not set, device 0 is used by default.

References lama::CUDAContext_cusparseHandle, currentDeviceNr, disable(), enable(), lama::Thread::getSelf(), LAMA_CUBLAS_CALL, LAMA_CUDA_DRV_CALL, LAMA_CUSPARSE_CALL, mComputeStream, mCUcontext, mCUdevice, mDeviceName, mDeviceNr, mMaxNumberOfAllocatedBytes, mNumberOfAllocatedBytes, mNumberOfAllocates, mOwnerThread, mTransferStream, and numUsedDevices.
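The device selection rule of the constructor can be sketched as a small helper. This is an illustration, not LAMA's code: resolveDeviceNr() is a hypothetical function, the value -1 for DEFAULT_DEVICE_NUMBER is an assumption, and the fallback for a non-numeric LAMA_DEVICE value is not specified by this documentation.

```cpp
#include <cstdlib>
#include <stdexcept>
#include <string>

// Hypothetical sentinel: the actual value of LAMA's DEFAULT_DEVICE_NUMBER is not given here.
const int DEFAULT_DEVICE_NUMBER = -1;

// Resolves the device number as described for the CUDAContext constructor.
int resolveDeviceNr( int device )
{
    if ( device != DEFAULT_DEVICE_NUMBER )
    {
        return device;   // explicit device number: use it as-is
    }

    const char* env = std::getenv( "LAMA_DEVICE" );

    if ( env == nullptr )
    {
        return 0;   // LAMA_DEVICE not set: default to device 0
    }

    try
    {
        return std::stoi( env );
    }
    catch ( const std::logic_error& )   // std::invalid_argument or std::out_of_range
    {
        return 0;   // assumption: an unparsable value also falls back to device 0
    }
}
```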

Member Function Documentation

void * lama::CUDAContext::allocate (const size_t size) const [virtual]

References LAMA_ASSERT_ERROR, LAMA_CONTEXT_ACCESS, LAMA_CUDA_DRV_CALL, LAMA_REGION, lama::max(), mMaxNumberOfAllocatedBytes, mNumberOfAllocatedBytes, and mNumberOfAllocates.

Referenced by allocate().

void lama::CUDAContext::allocate (ContextData &contextData, const size_t size) const [virtual]

This method allocates memory and must be implemented by each Context. The pointer to the allocated memory is stored in contextData.pointer; it is NULL if not enough memory is available.

- Parameters:
  - [out] contextData: the ContextData the memory should be allocated for.
  - [in] size: the number of bytes needed.

The overload allocate (const size_t size) returns the pointer to the allocated data directly, or NULL if not enough memory is available.

Implements lama::Context.

References allocate(), lama::Context::ContextData::pointer, and lama::Context::ContextData::setPinned().
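The counters referenced by the allocation routines (mNumberOfAllocates, mNumberOfAllocatedBytes and mMaxNumberOfAllocatedBytes) suggest bookkeeping along the following lines. This is a hedged sketch: CountingAllocator is a hypothetical class, std::malloc and std::free stand in for the CUDA driver allocation so that the code runs on any machine, and it assumes that freeing memory decrements the counters.

```cpp
#include <algorithm>
#include <cstddef>
#include <cstdlib>

// Hypothetical allocator keeping allocation statistics.
class CountingAllocator
{
public:
    void* allocate( const std::size_t size )
    {
        void* pointer = std::malloc( size );   // stand-in for device allocation

        if ( pointer == nullptr )
        {
            return nullptr;   // not enough memory available
        }

        ++mNumberOfAllocates;
        mNumberOfAllocatedBytes += static_cast<long long>( size );
        mMaxNumberOfAllocatedBytes = std::max( mMaxNumberOfAllocatedBytes, mNumberOfAllocatedBytes );
        return pointer;
    }

    // As for CUDAContext::free(pointer, size), the caller passes the size
    // that was used for the allocation.
    void free( void* pointer, const std::size_t size )
    {
        std::free( pointer );
        --mNumberOfAllocates;
        mNumberOfAllocatedBytes -= static_cast<long long>( size );
    }

    int numberOfAllocates() const { return mNumberOfAllocates; }
    long long allocatedBytes() const { return mNumberOfAllocatedBytes; }
    long long maxAllocatedBytes() const { return mMaxNumberOfAllocatedBytes; }

private:
    int mNumberOfAllocates = 0;                 // outstanding allocations
    long long mNumberOfAllocatedBytes = 0;      // bytes currently allocated
    long long mMaxNumberOfAllocatedBytes = 0;   // high-water mark
};
```

The high-water mark is useful for reporting peak device memory usage when the context is destroyed.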

bool lama::CUDAContext::cancpy (const ContextData &dst, const ContextData &src) const [virtual]

Checks if this Context can copy from src to dst.

- Parameters:
  - [in] dst: the destination ContextData.
  - [in] src: the source ContextData.

- Returns:
  - true if this Context can copy from src to dst.

Implements lama::Context.

References lama::Context::ContextData::context, lama::Context::CUDA, and lama::Context::Host.

Referenced by memcpy(), and memcpyAsync().

bool lama::CUDAContext::canUseData (const Context &other) const [virtual]

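Checks whether this context can use the data of the other context. The implementation compares the type of the other context with Context::CUDA and uses the device number mDeviceNr.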

Implements lama::Context.

References lama::Context::CUDA, lama::Context::getType(), and mDeviceNr.

void lama::CUDAContext::disable (const char *file, int line) const [virtual]

Disable computations in the context.

Reimplemented from lama::Context.

References currentDeviceNr, lama::Thread::getSelf(), and LAMA_CUDA_DRV_CALL.

Referenced by CUDAContext().

void lama::CUDAContext::enable (const char *filename, int line) const [virtual]

The CUDA interface used by the implementation requires that the device be set via a setDevice routine.

This method takes care of this if the device of this context is not the current one. Therefore, it must be called before any CUDA code is executed, including memory transfer routines.

Reimplemented from lama::Context.

References currentDeviceNr, lama::Thread::getSelf(), LAMA_CUDA_DRV_CALL, mCUcontext, and mDeviceNr.

Referenced by CUDAContext().
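The lazy device switch performed by enable() can be sketched as follows. LazyDeviceContext and setDevice() are hypothetical: setDevice() stands in for the driver call that makes a device current (in CUDA, for example, cudaSetDevice or cuCtxSetCurrent) and only counts its invocations. As with currentDeviceNr in CUDAContext, the current device is cached in a static variable so that the switch is skipped when it is unnecessary.

```cpp
// Hypothetical stand-in for the driver call; it only counts invocations.
static int setDeviceCalls = 0;
static void setDevice( int /*deviceNr*/ ) { ++setDeviceCalls; }

class LazyDeviceContext
{
public:
    explicit LazyDeviceContext( int deviceNr ) : mDeviceNr( deviceNr ) {}

    // Must be called before any device work (kernels or memory transfers).
    // The switch is skipped if this device is already the current one.
    void enable() const
    {
        if ( currentDeviceNr != mDeviceNr )
        {
            setDevice( mDeviceNr );
            currentDeviceNr = mDeviceNr;
        }
    }

    static int currentDevice() { return currentDeviceNr; }

private:
    int mDeviceNr;
    static int currentDeviceNr;   // -1: no device set yet
};

int LazyDeviceContext::currentDeviceNr = -1;
```

Caching the current device in one static variable is only correct when a single host thread drives the GPUs, because the current CUDA device or context is a per-thread setting; this is presumably why enable() and disable() also reference Thread::getSelf().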

void lama::CUDAContext::free (void *pointer, const size_t size) const [virtual]

This method frees data that was allocated by this allocator.

- Parameters:
  - [in] pointer: the pointer to the allocated data.
  - [in] size: the number of bytes that were allocated with pointer.

The pointer must have been allocated by the same allocator with the given size.

Implements lama::Context.

References LAMA_CONTEXT_ACCESS, LAMA_CUDA_DRV_CALL, LAMA_REGION, mNumberOfAllocatedBytes, and mNumberOfAllocates.

Referenced by free().

void lama::CUDAContext::free (ContextData &contextData) const [virtual]

This method frees data that was allocated by this allocator.

- Parameters:
  - [in] contextData: holds the pointer to the allocated data.

The pointer must have been allocated by the same allocator.

Implements lama::Context.

References lama::Context::ContextData::context, free(), lama::Context::getType(), LAMA_ASSERT_EQUAL_ERROR, lama::Context::ContextData::pointer, and lama::Context::ContextData::size.

std::auto_ptr< CUDAStreamSyncToken > lama::CUDAContext::getComputeSyncToken () const

References lama::CUDAStreamSyncToken, and mComputeStream.

Referenced by lama::LUSolver::computeLUFactorization().

int lama::CUDAContext::getDeviceNr () const [inline]

Referenced by memcpy(), and lama::CUDAHostContextManager::setAsCurrent().

const LAMAInterface & lama::Context::getInterface () const [inherited]

This method returns the interface for this context.

References lama::LAMAInterfaceRegistry::getInterface(), lama::LAMAInterfaceRegistry::getRegistry(), lama::Context::getType(), and LAMA_ASSERT_DEBUG.

std::auto_ptr< SyncToken > lama::CUDAContext::getSyncToken () const [virtual]

Implementation for Context::getSyncToken.

Implements lama::Context.

References lama::CUDAStreamSyncToken, and mComputeStream.

std::auto_ptr< CUDAStreamSyncToken > lama::CUDAContext::getTransferSyncToken () const

References lama::CUDAStreamSyncToken, and mTransferStream.

ContextType lama::Context::getType () const [inline, inherited]

Method to get the type of the context.

Referenced by lama::PGASContext::cancpy(), lama::CUDAHostContext::cancpy(), lama::HostContext::canUseData(), canUseData(), lama::PGASContext::free(), lama::CUDAHostContext::free(), free(), lama::Context::getInterface(), lama::PGASContext::memcpy(), lama::CUDAHostContext::memcpy(), lama::PGASContext::memcpyAsync(), and lama::CUDAHostContext::memcpyAsync().

lama::CUDAContext::LAMA_LOG_DECL_STATIC_LOGGER (logger) [private]

Reimplemented from lama::Context.

void lama::CUDAContext::memcpy (void *dst, const void *src, const size_t size) const [virtual]

Memory copy within the same context.

- Parameters:
  - [in] dst: pointer to the destination.
  - [in] src: pointer to the source.
  - [in] size: the number of bytes to be copied.

This method copies size bytes.

Implements lama::Context.

References LAMA_CONTEXT_ACCESS, and LAMA_CUDA_DRV_CALL.

Referenced by memcpy().

void lama::CUDAContext::memcpy (ContextData &dst, const ContextData &src, const size_t size) const [virtual]

Memory copy.

Copies the first size bytes from src.pointer to dst.pointer. The memory pointed to by src.pointer might be registered (pinned) to allow faster memory transfers; however, its contents are not altered by this function.

- Parameters:
  - [in] dst: the destination ContextData.
  - [in] src: the source ContextData.
  - [in] size: the number of bytes to be copied.

Implements lama::Context.

References cancpy(), lama::Context::ContextData::context, lama::Context::CUDA, getDeviceNr(), lama::Context::Host, lama::Context::ContextData::isPinned(), LAMA_ASSERT_ERROR, LAMA_CONTEXT_ACCESS, LAMA_CUDA_DRV_CALL, LAMA_REGION, LAMA_THROWEXCEPTION, mCUcontext, memcpy(), memcpyFromCUDAHost(), memcpyFromHost(), memcpyToCUDAHost(), memcpyToHost(), minPinnedSize, lama::Context::ContextData::pointer, lama::Context::ContextData::setCleanFunction(), lama::Context::ContextData::setPinned(), and lama::Context::ContextData::size.
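The References list above suggests that memcpy() dispatches to one of the private transfer helpers depending on the context types of src and dst and on whether the host memory is pinned. The following sketch only models that routing decision. selectRoute() and Route are hypothetical, and the exact rule, including the use of minPinnedSize as a size threshold, is an assumption inferred from the referenced names rather than taken from the LAMA sources.

```cpp
#include <cstddef>
#include <limits>

enum ContextType { Host, CUDA, OpenCL, MaxContext };

// Hypothetical route names mirroring memcpy(), memcpyFromHost(),
// memcpyFromCUDAHost(), memcpyToHost() and memcpyToCUDAHost().
enum class Route { DeviceToDevice, FromHost, FromPinnedHost, ToHost, ToPinnedHost, Unsupported };

// Assumed rule: host memory that is already pinned, or large enough to be
// worth pinning (size >= minPinnedSize), takes the pinned-host path.
Route selectRoute( ContextType src, ContextType dst, bool hostIsPinned,
                   std::size_t size, std::size_t minPinnedSize )
{
    const bool pinned = hostIsPinned || size >= minPinnedSize;

    if ( src == CUDA && dst == CUDA )
    {
        return Route::DeviceToDevice;   // cross-device copies are not modeled here
    }
    if ( src == Host && dst == CUDA )
    {
        return pinned ? Route::FromPinnedHost : Route::FromHost;
    }
    if ( src == CUDA && dst == Host )
    {
        return pinned ? Route::ToPinnedHost : Route::ToHost;
    }
    return Route::Unsupported;   // corresponds to cancpy() returning false
}
```

With the documented default minPinnedSize = std::numeric_limits<size_t>::max(), the size threshold in this sketch practically never triggers, so only memory that is already pinned would take the pinned-host path.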

std::auto_ptr< SyncToken > lama::CUDAContext::memcpyAsync (void *dst, const void *src, const size_t size) const [virtual]

Implements lama::Context.

References lama::CUDAStreamSyncToken, LAMA_CONTEXT_ACCESS, LAMA_CUDA_DRV_CALL, and mTransferStream.

Referenced by memcpyAsync().

std::auto_ptr< SyncToken > lama::CUDAContext::memcpyAsync (ContextData &dst, const ContextData &src, const size_t size) const [virtual]

Implements lama::Context.

References cancpy(), lama::Context::ContextData::context, lama::Context::CUDA, lama::Context::Host, lama::Context::ContextData::isPinned(), LAMA_ASSERT_ERROR, LAMA_CONTEXT_ACCESS, LAMA_CUDA_DRV_CALL, LAMA_REGION, LAMA_THROWEXCEPTION, memcpyAsync(), memcpyAsyncFromCUDAHost(), memcpyAsyncFromHost(), memcpyAsyncToCUDAHost(), memcpyAsyncToHost(), minPinnedSize, lama::Context::ContextData::pointer, lama::Context::ContextData::setCleanFunction(), lama::Context::ContextData::setPinned(), and lama::Context::ContextData::size.
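The asynchronous variants return a SyncToken that the caller must wait on before using the destination data. A minimal sketch of that pattern in standard C++ follows. SyncToken, FutureSyncToken and memcpyAsyncSketch() are hypothetical simplifications, std::async stands in for the CUDA transfer stream (mTransferStream), and std::unique_ptr replaces std::auto_ptr, which was deprecated in C++11 and removed in C++17.

```cpp
#include <cstring>
#include <future>
#include <memory>
#include <utility>

// Simplified token: represents an operation that may still be running.
class SyncToken
{
public:
    virtual ~SyncToken() {}
    virtual void wait() = 0;   // blocks until the operation has completed
};

// Token backed by a std::future. A future returned by std::async also blocks
// in its destructor, so a forgotten wait() cannot leave the copy running.
class FutureSyncToken : public SyncToken
{
public:
    explicit FutureSyncToken( std::future<void> future ) : mFuture( std::move( future ) ) {}

    void wait() override
    {
        if ( mFuture.valid() )
        {
            mFuture.get();   // waits; later calls are no-ops
        }
    }

private:
    std::future<void> mFuture;
};

// Starts the copy on another thread and returns at once; dst and src must
// stay valid and unmodified until wait() has returned.
std::unique_ptr<SyncToken> memcpyAsyncSketch( void* dst, const void* src, std::size_t size )
{
    std::future<void> future = std::async( std::launch::async, [dst, src, size]() {
        std::memcpy( dst, src, size );
    } );
    return std::unique_ptr<SyncToken>( new FutureSyncToken( std::move( future ) ) );
}
```

In CUDAContext, the tokens are CUDAStreamSyncToken objects tied to mTransferStream or mComputeStream, as the References entries show.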

std::auto_ptr< SyncToken > lama::CUDAContext::memcpyAsyncFromCUDAHost (void *dst, const void *src, const size_t size) const [private]

References lama::CUDAStreamSyncToken, LAMA_CONTEXT_ACCESS, LAMA_CUDA_DRV_CALL, and mTransferStream.

Referenced by memcpyAsync().

std::auto_ptr< SyncToken > lama::CUDAContext::memcpyAsyncFromHost (void *dst, const void *src, const size_t size) const [private]

References memcpyFromHost().

Referenced by memcpyAsync().

std::auto_ptr< SyncToken > lama::CUDAContext::memcpyAsyncToCUDAHost (void *dst, const void *src, const size_t size) const [private]

References lama::CUDAStreamSyncToken, LAMA_CONTEXT_ACCESS, LAMA_CUDA_DRV_CALL, and mTransferStream.

Referenced by memcpyAsync().

std::auto_ptr< SyncToken > lama::CUDAContext::memcpyAsyncToHost (void *dst, const void *src, const size_t size) const [private]

References memcpyToHost().

Referenced by memcpyAsync().

void lama::CUDAContext::memcpyFromCUDAHost (void *dst, const void *src, const size_t size) const [private]

References LAMA_CONTEXT_ACCESS, LAMA_CUDA_DRV_CALL, and LAMA_REGION.

Referenced by memcpy().

void lama::CUDAContext::memcpyFromHost (void *dst, const void *src, const size_t size) const [private]

References LAMA_CONTEXT_ACCESS, LAMA_CUDA_DRV_CALL, and LAMA_REGION.

Referenced by memcpy(), and memcpyAsyncFromHost().

void lama::CUDAContext::memcpyToCUDAHost (void *dst, const void *src, const size_t size) const [private]

References LAMA_CONTEXT_ACCESS, LAMA_CUDA_DRV_CALL, and LAMA_REGION.

Referenced by memcpy().

void lama::CUDAContext::memcpyToHost (void *dst, const void *src, const size_t size) const [private]

References LAMA_CONTEXT_ACCESS, LAMA_CUDA_DRV_CALL, and LAMA_REGION.

Referenced by memcpy(), and memcpyAsyncToHost().

bool lama::Context::operator!= (const Context &other) const [inherited]

The inequality operator is the inverse of operator==.

References lama::Context::operator==().

bool lama::Context::operator== (const Context &other) const [inherited]

This predicate returns true if the two contexts have the same type and can use the same data.

References lama::Context::canUseData(), LAMA_ASSERT_EQUAL_DEBUG, and lama::Context::mContextType.

Referenced by lama::Context::operator!=().

void lama::CUDAContext::writeAt (std::ostream &stream) const [virtual]

This method writes information about the device to the output stream. Derived classes should override this method to give more specific information about the device that might be useful for logging.

Reimplemented from lama::Context.

References mDeviceName, and mDeviceNr.
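A common way to use a virtual writeAt() is to forward a stream operator to it, so that derived classes only override writeAt(). The sketch below is hypothetical: PrintableContext, PrintableCUDAContext and the operator<< overload are illustrative names, and the output format is an assumption (the real CUDAContext::writeAt() uses mDeviceNr and mDeviceName, but its exact text is not documented here).

```cpp
#include <ostream>
#include <sstream>
#include <string>

// Hypothetical base class with an overridable writeAt().
class PrintableContext
{
public:
    virtual ~PrintableContext() {}

    virtual void writeAt( std::ostream& stream ) const
    {
        stream << "Context";
    }
};

// The stream operator dispatches to the most derived writeAt().
inline std::ostream& operator<<( std::ostream& stream, const PrintableContext& context )
{
    context.writeAt( stream );
    return stream;
}

// Hypothetical derived class adding device-specific information.
class PrintableCUDAContext : public PrintableContext
{
public:
    PrintableCUDAContext( int deviceNr, const std::string& deviceName )
        : mDeviceNr( deviceNr ), mDeviceName( deviceName ) {}

    void writeAt( std::ostream& stream ) const override
    {
        stream << "CUDAContext(" << mDeviceNr << ": " << mDeviceName << ")";
    }

private:
    int mDeviceNr;
    std::string mDeviceName;
};
```

Because operator<< takes a base-class reference, logging code can print any context without knowing its concrete type.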

Friends And Related Function Documentation

friend class CUDAContextManager [friend]

Field Documentation

int lama::CUDAContext::currentDeviceNr = -1 [static, private]

Number of the device currently set for CUDA.

Referenced by CUDAContext(), disable(), enable(), and ~CUDAContext().

CUstream lama::CUDAContext::mComputeStream [private]

Stream for asynchronous computations.

Referenced by CUDAContext(), getComputeSyncToken(), getSyncToken(), and ~CUDAContext().

ContextType lama::Context::mContextType [protected, inherited]

Referenced by lama::Context::Context(), lama::Context::operator==(), and lama::Context::~Context().

CUcontext lama::CUDAContext::mCUcontext [private]

Data structure for the context.

Referenced by CUDAContext(), enable(), memcpy(), and ~CUDAContext().

CUdevice lama::CUDAContext::mCUdevice [private]

Data structure for the device.

Referenced by CUDAContext().

cusparseHandle_t lama::CUDAContext::mCusparseHandle [private]

Handle to the cuSPARSE library.

std::string lama::CUDAContext::mDeviceName [private]

Name set during initialization.

Referenced by CUDAContext(), and writeAt().

int lama::CUDAContext::mDeviceNr [private]

Number of the device for this context.

Referenced by canUseData(), CUDAContext(), enable(), writeAt(), and ~CUDAContext().

bool lama::Context::mEnabled [mutable, protected, inherited]

If true, the context is currently being accessed.

Referenced by lama::Context::disable(), and lama::Context::enable().

const char* lama::Context::mFile [mutable, protected, inherited]

File name where context has been enabled.

Referenced by lama::Context::disable(), and lama::Context::enable().

size_t lama::CUDAContext::minPinnedSize = std::numeric_limits<size_t>::max() [static, private]

Referenced by memcpy(), and memcpyAsync().

int lama::Context::mLine [mutable, protected, inherited]

Line number where context has been enabled.

Referenced by lama::Context::disable(), and lama::Context::enable().

long long lama::CUDAContext::mMaxNumberOfAllocatedBytes [mutable, private]

Counts the maximum number of allocated bytes.

Referenced by allocate(), CUDAContext(), and ~CUDAContext().

long long lama::CUDAContext::mNumberOfAllocatedBytes [mutable, private]

Counts the bytes allocated on the device.

Referenced by allocate(), CUDAContext(), free(), and ~CUDAContext().

int lama::CUDAContext::mNumberOfAllocates [mutable, private]

Counts the number of allocations.

Referenced by allocate(), CUDAContext(), free(), and ~CUDAContext().

Thread::Id lama::CUDAContext::mOwnerThread [private]

Referenced by CUDAContext().

CUstream lama::CUDAContext::mTransferStream [private]

Stream for memory transfers.

Referenced by CUDAContext(), getTransferSyncToken(), memcpyAsync(), memcpyAsyncFromCUDAHost(), memcpyAsyncToCUDAHost(), and ~CUDAContext().

int lama::CUDAContext::numUsedDevices = 0 [static, private]

Total number of used devices.

Referenced by CUDAContext(), and ~CUDAContext().

The documentation for this class was generated from the following files:

- /home/brandes/workspace/LAMA/src/lama/cuda/CUDAContext.hpp

- /home/brandes/workspace/LAMA/src/lama/cuda/CUDAContext.cpp